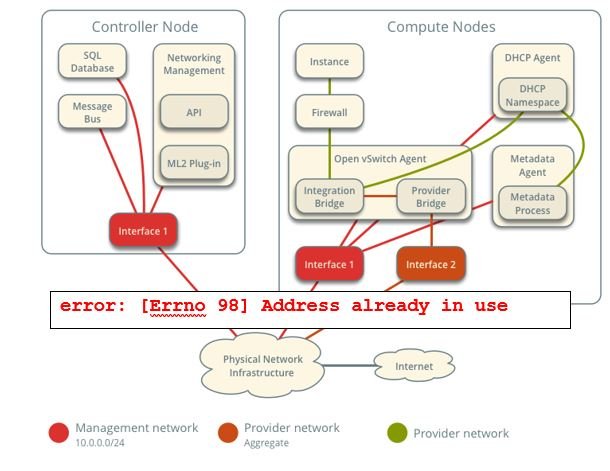

Dear friends, today we will discuss this error: `neutron.plugins.ml2.drivers.openvswitch.agent.ovs_neutron_agent [-] Switch connection timeout Agent terminated!`

Switch connection timeout Problem

We were not able to start the Open vSwitch agent on an OVS compute node; it stayed in the starting state. When we tried to restart it, the agent logged the following error:

```
2020-07-15 07:40:30.016 31727 INFO neutron.common.config [-] Logging enabled!
2020-07-15 07:40:30.016 31727 INFO neutron.common.config [-] /usr/bin/neutron-openvswitch-agent version 12.0.4.dev31
2020-07-15 07:40:30.017 31727 INFO ryu.base.app_manager [-] loading app neutron.plugins.ml2.drivers.openvswitch.agent.openflow.native.ovs_ryuapp
2020-07-15 07:40:30.371 31727 INFO ryu.base.app_manager [-] loading app ryu.app.ofctl.service
2020-07-15 07:40:30.372 31727 INFO ryu.base.app_manager [-] loading app ryu.controller.ofp_handler
2020-07-15 07:40:30.373 31727 INFO ryu.base.app_manager [-] instantiating app neutron.plugins.ml2.drivers.openvswitch.agent.openflow.native.ovs_ryuapp of OVSNeutronAgentRyuApp
2020-07-15 07:40:30.373 31727 INFO ryu.base.app_manager [-] instantiating app ryu.controller.ofp_handler of OFPHandler
2020-07-15 07:40:30.373 31727 INFO ryu.base.app_manager [-] instantiating app ryu.app.ofctl.service of OfctlService
2020-07-15 07:40:30.377 31727 INFO neutron.agent.agent_extensions_manager [-] Loaded agent extensions: ['qos']
2020-07-15 07:40:30.379 31727 WARNING oslo_config.cfg [-] Option "rabbit_port" from group "oslo_messaging_rabbit" is deprecated for removal (Replaced by [DEFAULT]/transport_url). Its value may be silently ignored in the future.
2020-07-15 07:40:30.380 31727 WARNING oslo_config.cfg [-] Option "rabbit_password" from group "oslo_messaging_rabbit" is deprecated for removal (Replaced by [DEFAULT]/transport_url). Its value may be silently ignored in the future.
2020-07-15 07:40:30.380 31727 WARNING oslo_config.cfg [-] Option "rabbit_userid" from group "oslo_messaging_rabbit" is deprecated for removal (Replaced by [DEFAULT]/transport_url). Its value may be silently ignored in the future.
2020-07-15 07:40:30.390 31727 ERROR ryu.lib.hub [-] hub: uncaught exception: Traceback (most recent call last):
  File "/usr/lib/python2.7/site-packages/ryu/lib/hub.py", line 60, in _launch
    return func(*args, **kwargs)
  File "/usr/lib/python2.7/site-packages/ryu/controller/controller.py", line 100, in __call__
    self.ofp_ssl_listen_port)
  File "/usr/lib/python2.7/site-packages/ryu/controller/controller.py", line 123, in server_loop
    datapath_connection_factory)
  File "/usr/lib/python2.7/site-packages/ryu/lib/hub.py", line 127, in __init__
    self.server = eventlet.listen(listen_info)
  File "/usr/lib/python2.7/site-packages/eventlet/convenience.py", line 47, in listen
    sock.listen(backlog)
  File "/usr/lib64/python2.7/socket.py", line 224, in meth
    return getattr(self._sock,name)(*args)
error: [Errno 98] Address already in use
: error: [Errno 98] Address already in use
2020-07-15 07:40:30.530 31727 INFO neutron.plugins.ml2.drivers.openvswitch.agent.openflow.native.ovs_bridge [-] Bridge br-int has datapath-ID 00006afbd9d91544
2020-07-15 07:41:00.542 31727 ERROR neutron.plugins.ml2.drivers.openvswitch.agent.openflow.native.ofswitch [-] Switch connection timeout
2020-07-15 07:41:00.543 31727 ERROR neutron.plugins.ml2.drivers.openvswitch.agent.ovs_neutron_agent [-] Switch connection timeout Agent terminated!: RuntimeError: Switch connection timeout
```
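The two lines that matter in this log are `[Errno 98] Address already in use`, raised when the agent's embedded Ryu OpenFlow controller tries to listen on its port, and the later `Switch connection timeout`. A minimal triage sketch, assuming the agent log is fed on standard input; the helper name `diagnose_ovs_agent_log` and its output labels are hypothetical:

```shell
# Hypothetical triage helper: classifies a neutron-openvswitch-agent log read
# from stdin using the two error strings seen in the log above.
diagnose_ovs_agent_log() {
  log=$(cat)
  if printf '%s\n' "$log" | grep -qF '[Errno 98] Address already in use' &&
     printf '%s\n' "$log" | grep -qF 'Switch connection timeout'; then
    # The OpenFlow listener could not bind, and the agent later gave up
    # waiting for the switch connection: suspect a port conflict.
    echo "port-conflict"
  elif printf '%s\n' "$log" | grep -qF 'Switch connection timeout'; then
    echo "timeout-other-cause"
  else
    echo "no-known-error"
  fi
}
```

Usage, with the log path taken from the agent command line shown later in this post: `diagnose_ovs_agent_log < /var/log/neutron/openvswitch-agent.log`.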

When you run `neutron agent-list`, neutron-openvswitch-agent on the OVS compute node is reported as alive. But when you check `sudo docker ps --all | grep neutron`, you can see that the neutron-openvswitch-agent container on that node has not started and is stuck in the starting state.

```
[urclouds-admin@overcloud-controller-0 (overcloudrc) ~]$ neutron agent-list
neutron CLI is deprecated and will be removed in the future. Use openstack CLI instead.
+--------------------------------------+--------------------+--------------------------------------------------+-------------------+-------+----------------+---------------------------+
| id                                   | agent_type         | host                                             | availability_zone | alive | admin_state_up | binary                    |
+--------------------------------------+--------------------+--------------------------------------------------+-------------------+-------+----------------+---------------------------+
| 06876570-06d4-46d3-b3bf-ecee44c0f52b | NIC Switch agent   | overcloud-ovscompute-2.localdomain               |                   | xxx   | True           | neutron-sriov-nic-agent   |
| 08167a3a-20b8-4f12-83d3-505044defc78 | Open vSwitch agent | overcloud-ovscompute-13.localdomain              |                   | :-)   | True           | neutron-openvswitch-agent |
| 086b8c3b-d6bd-4c7e-845a-a873d31d4699 | Open vSwitch agent | overcloud-ovscompute-15.localdomain              |                   | :-)   | True           | neutron-openvswitch-agent |
+--------------------------------------+--------------------+--------------------------------------------------+-------------------+-------+----------------+---------------------------+
[urclouds-admin@overcloud-controller-0 (overcloudrc) ~]$
```
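Since `neutron agent-list` can show the agent as alive while its container is not healthy, it helps to read the container state directly. A minimal sketch, assuming the container is named `neutron_ovs_agent` (the name used in Solution 2 below) and that its status lines come from `sudo docker ps --all --format '{{.Names}} {{.Status}}'`; the helper `container_state` and its labels are hypothetical:

```shell
# Hypothetical helper: reads "<name> <status>" lines, as printed by
#   sudo docker ps --all --format '{{.Names}} {{.Status}}'
# and reports the state of the container named in $1.
container_state() {
  awk -v n="$1" '
    $1 == n {
      found = 1
      s = $0
      sub(/^[^ ]+ +/, "", s)                 # drop the name, keep the status
      if (s ~ /\(healthy\)/) print "healthy"
      else if (s ~ /^Up/)    print "up-not-healthy"   # e.g. (health: starting)
      else                   print "down"             # Exited, Restarting, ...
    }
    END { if (!found) print "missing" }'
}
```

Usage: `sudo docker ps --all --format '{{.Names}} {{.Status}}' | container_state neutron_ovs_agent`.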

Solution 1

You can try the following solution for this issue:

Non-working controller (controller-2):

```
[root@overcloud-controller-2 neutron]# netstat -tulpn |grep 6633
tcp 51 0 127.0.0.1:6633 0.0.0.0:* LISTEN 170970/sudo
[root@overcloud-controller-2 neutron]#
[root@overcloud-controller-2 neutron]# ps aux |grep 170970
root 170970 0.0 0.0 193332 2792 ? S Apr11 0:00 sudo neutron-rootwrap-daemon /etc/neutron/rootwrap.conf
```

Working controller (controller-1):

```
[root@overcloud-controller-1 ~]# netstat -tulpn |grep 6633
tcp 0 0 127.0.0.1:6633 0.0.0.0:* LISTEN 275273/python2
[root@overcloud-controller-1 ~]#
[root@overcloud-controller-1 ~]# ps aux|grep 275273
neutron 275273 5.6 0.0 394056 99824 ? Ss Apr19 1770:07 /usr/bin/python2 /usr/bin/neutron-openvswitch-agent --config-file /usr/share/neutron/neutron-dist.conf --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/openvswitch_agent.ini --config-dir /etc/neutron/conf.d/common --config-dir /etc/neutron/conf.d/neutron-openvswitch-agent --log-file /var/log/neutron/openvswitch-agent.log
```
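The difference between the two controllers is which process owns port 6633: a `sudo neutron-rootwrap-daemon` process on controller-2, the agent's own `python2` process on controller-1. That comparison can be scripted; this is a sketch that assumes `netstat -tulpn` output on standard input and treats a `python` owner as the agent, as on controller-1. The helper names `port_owner` and `check_of_port` are hypothetical:

```shell
# Hypothetical helper: print the PID/Program column of every TCP socket
# listening on port $1, from `netstat -tulpn` output read on stdin.
port_owner() {
  awk -v p=":$1" '
    $1 ~ /^tcp/ && $6 == "LISTEN" &&
    substr($4, length($4) - length(p) + 1) == p { print $7 }'
}

# Classify the owner of the OpenFlow listen port (6633 in this post).
# Assumption: the agent shows up as a python process, as on controller-1.
check_of_port() {
  owner=$(port_owner "${1:-6633}")
  case "$owner" in
    "")        echo "free" ;;
    */python*) echo "ok: $owner" ;;
    *)         echo "conflict: $owner" ;;
  esac
}
```

Usage: `sudo netstat -tulpn | check_of_port 6633`. Root privileges are needed for netstat to show the PID of sockets owned by other users.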

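Kill the stale rootwrap process on controller-2 (PID 170970 above) so that neutron-openvswitch-agent can bind port 6633 and start correctly. Note the Recv-Q of 51 on controller-2's listening socket: connections are queuing on a socket that nothing accepts. After that, the Open vSwitch agent on controller-2 starts successfully, as you can see below: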

```
+--------------------------------------+--------------------+------------------------------------+-------------------+-------+----------------+---------------------------+
| id                                   | agent_type         | host                               | availability_zone | alive | admin_state_up | binary                    |
+--------------------------------------+--------------------+------------------------------------+-------------------+-------+----------------+---------------------------+
| 0b42df33-b5dc-4ffe-b452-9d4eaf2f2701 | L3 agent           | overcloud-controller-1.localdomain | nova              | :-)   | True           | neutron-l3-agent          |
| 1afbdf75-05d0-4f10-bab1-380c3ce846bc | Open vSwitch agent | overcloud-controller-2.localdomain |                   | :-)   | True           | neutron-openvswitch-agent |
```
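Before killing a PID found this way, it is worth confirming that it really is the rootwrap daemon and not the agent itself. A minimal sketch with hypothetical helper names; it reads the command line with `ps -o args= -p`, and the `DRY_RUN` variable is an assumption of this sketch (not part of any OpenStack tooling) that prints the `kill` command instead of running it:

```shell
# Hypothetical guard: true only for a rootwrap daemon command line such as
# "sudo neutron-rootwrap-daemon /etc/neutron/rootwrap.conf" on controller-2.
is_stale_rootwrap() {
  case "$1" in
    *neutron-rootwrap-daemon*) return 0 ;;
    *) return 1 ;;
  esac
}

# Kill PID $1 only if it is a rootwrap daemon; set DRY_RUN=1 to just print.
kill_stale_rootwrap() {
  pid=$1
  args=$(ps -o args= -p "$pid") || { echo "no such process: $pid" >&2; return 1; }
  if is_stale_rootwrap "$args"; then
    ${DRY_RUN:+echo} sudo kill "$pid"
  else
    echo "refusing to kill $pid: $args" >&2
    return 1
  fi
}
```

Usage: `DRY_RUN=1 kill_stale_rootwrap 170970` to preview, then `kill_stale_rootwrap 170970`.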


Solution 2

In my case, neutron-openvswitch-agent runs as a container service and should be started through Docker.

By mistake, however, it was started by systemd (systemctl) after the compute node was rebooted, as shown below:

```
[stack@undercloud (stackrc) ~]$ salt "*omput*" cmd.run " sudo systemctl status neutron-openvswitch-agent"
ovscompute-15:
* neutron-openvswitch-agent.service - OpenStack Neutron Open vSwitch Agent
Loaded: loaded (/usr/lib/systemd/system/neutron-openvswitch-agent.service; enabled; vendor preset: disabled)
Active: active (running) since Tue 2020-07-21 07:07:36 UTC; 2 days ago
Process: 20424 ExecStartPre=/usr/bin/neutron-enable-bridge-firewall.sh (code=exited, status=0/SUCCESS)
Main PID: 20430 (neutron-openvsw)
Tasks: 8
Memory: 129.4M
CGroup: /system.slice/neutron-openvswitch-agent.service
|- 5343 ovsdb-client monitor tcp:127.0.0.1:6640 Interface name,ofport,external_ids --format=json
|- 5345 ovsdb-client monitor tcp:127.0.0.1:6640 Bridge name --format=json
|-14158 ovsdb-client monitor tcp:127.0.0.1:6640 Interface name,ofport,external_ids --format=json
|-14160 ovsdb-client monitor tcp:127.0.0.1:6640 Bridge name --format=json
|-20430 /usr/bin/python2 /usr/bin/neutron-openvswitch-agent --config-file /usr/share/neutron/neutron-dist.conf --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/openvswitch_agent.ini --config-dir /etc/neutron/conf.d/common --config-dir /etc/neutron/conf.d/neutron-openvswitch-agent --log-file /var/log/neutron/openvswitch-agent.log
|-20518 ovsdb-client monitor tcp:127.0.0.1:6640 Interface name,ofport,external_ids --format=json
`-20520 ovsdb-client monitor tcp:127.0.0.1:6640 Bridge name --format=json
Jul 21 07:07:36 overcloud-ovscompute-15 systemd[1]: Starting OpenStack Neutron Open vSwitch Agent...
Jul 21 07:07:36 overcloud-ovscompute-15 neutron-enable-bridge-firewall.sh[20424]: net.bridge.bridge-nf-call-iptables = 1
Jul 21 07:07:36 overcloud-ovscompute-15 neutron-enable-bridge-firewall.sh[20424]: net.bridge.bridge-nf-call-ip6tables = 1
Jul 21 07:07:36 overcloud-ovscompute-15 systemd[1]: Started OpenStack Neutron Open vSwitch Agent.
Jul 21 07:07:39 overcloud-ovscompute-15 sudo[20493]: neutron : TTY=unknown ; PWD=/ ; USER=root ; COMMAND=/bin/neutron-rootwrap-daemon /etc/neutron/rootwrap.conf
Jul 21 07:07:39 overcloud-ovscompute-15 ovs-vsctl[20517]: ovs|00001|vsctl|INFO|Called as /bin/ovs-vsctl --timeout=5 --id=@manager -- create Manager "target=\"ptcp:6640:127.0.0.1\"" -- add Open_vSwitch . manager_options @manager
Jul 21 07:07:39 overcloud-ovscompute-15 ovs-vsctl[20517]: ovs|00002|ovsdb_idl|WARN|transaction error: {"details":"Transaction causes multiple rows in \"Manager\" table to have identical values (\"ptcp:6640:127.0.0.1\") for index on column \"target\". First row, with UUID f083b111-2ecc-444a-b6d7-556920f80f41, existed in the database before this transaction and was not modified by the transaction. Second row, with UUID 2dd55430-7d1d-46c8-83c0-f1cdc72118ca, was inserted by this transaction.","error":"constraint violation"}
Jul 21 07:07:39 overcloud-ovscompute-15 ovs-vsctl[20517]: ovs|00003|db_ctl_base|ERR|transaction error: {"details":"Transaction causes multiple rows in \"Manager\" table to have identical values (\"ptcp:6640:127.0.0.1\") for index on column \"target\". First row, with UUID f083b111-2ecc-444a-b6d7-556920f80f41, existed in the database before this transaction and was not modified by the transaction. Second row, with UUID 2dd55430-7d1d-46c8-83c0-f1cdc72118ca, was inserted by this transaction.","error":"constraint violation"}
Jul 21 07:07:39 overcloud-ovscompute-15 ovs-vsctl[20519]: ovs|00001|vsctl|INFO|Called as /bin/ovs-vsctl --timeout=5 --id=@manager -- create Manager "target=\"ptcp:6640:127.0.0.1\"" -- add Open_vSwitch . manager_options @manager
Jul 21 07:07:39 overcloud-ovscompute-15 ovs-vsctl[20519]: ovs|00002|ovsdb_idl|WARN|transaction error: {"details":"Transaction causes multiple rows in \"Manager\" table to have identical values (\"ptcp:6640:127.0.0.1\") for index on column \"target\". First row, with UUID f083b111-2ecc-444a-b6d7-556920f80f41, existed in the database before this transaction and was not modified by the transaction. Second row, with UUID 92a15794-89fb-4b95-bdab-d84c3a9f9169, was inserted by this transaction.","error":"constraint violation"}
```
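The problem in this case is which launcher started the agent. A minimal check, assuming the systemd unit name `neutron-openvswitch-agent` and the container name `neutron_ovs_agent` used in this post; `systemctl is-active` prints `active` for a running unit, and `docker inspect -f '{{.State.Running}}'` prints `true` for a running container. The helper names and their output labels are hypothetical:

```shell
# Hypothetical helper: classifies how the OVS agent is running, given
#   $1 = output of: systemctl is-active neutron-openvswitch-agent
#   $2 = output of: docker inspect -f '{{.State.Running}}' neutron_ovs_agent
classify_agent_launch() {
  case "$1:$2" in
    active:true) echo "conflict: systemd unit and container both running" ;;
    active:*)    echo "wrong-launcher: systemd unit running, container not" ;;
    *:true)      echo "ok: container only" ;;
    *)           echo "down: neither running" ;;
  esac
}

# Gather both states on the local compute node and classify them.
check_agent_launch() {
  classify_agent_launch \
    "$(systemctl is-active neutron-openvswitch-agent 2>/dev/null)" \
    "$(sudo docker inspect -f '{{.State.Running}}' neutron_ovs_agent 2>/dev/null)"
}
```

On a containerized compute node, only `ok: container only` is the expected healthy result.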

I then stopped and disabled the `neutron-openvswitch-agent` service in systemd:

```
sudo systemctl stop neutron-openvswitch-agent
sudo systemctl disable neutron-openvswitch-agent
```

After that, I restarted the `neutron_ovs_agent` container through Docker, and it started successfully:

```
sudo docker restart neutron_ovs_agent
```

Afterwards, the container list on ovscompute-15 shows `neutron_ovs_agent` up and healthy:

```
ovscompute-15:
831e35adcb02 172.31.0.1:8787/cbis/centos-binary-neutron-openvswitch-agent:queens-latest "kolla_start" 13 months ago Up 15 hours (healthy) neutron_ovs_agent
4c4e61eea4cd 172.31.0.1:8787/cbis/centos-binary-neutron-server:queens-latest "puppet apply --mo…" 13 months ago Exited (0) 13 months ago neutron_ovs_bridge
```

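So this was the solution for the `error: [Errno 98] Address already in use` failure of the openvswitch-agent service. In both cases the agent's Ryu OpenFlow controller could not bind its listen port (6633 in the netstat output of Solution 1) because another process already held it: a leftover rootwrap daemon in the first case, and most likely the systemd-started agent instance in the second. br-int therefore never connected to the agent's controller, which is why the agent finally terminated with `Switch connection timeout`.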

